By Sean Rubinsztein-Dunlop, Echo Hui, Sarah Curnow and Kevin Nguyen

"The highly sensitive information of millions of Australians (including logins for personal Australian Tax Office accounts, medical and personal data of thousands of NDIS recipients, and confidential details of an alleged assault of a Victorian school student by their teacher) is among terabytes of hacked data being openly traded online.

An ABC investigation has identified large swathes of previously unreported confidential material that is widely available on the internet, ranging from sensitive legal contracts to the login details of individual MyGov accounts, which are being sold for as little as $1 USD.

The huge volume of newly identified information confirms that the high-profile hacks of Medibank and Optus represent just a fraction of the confidential Australian records recently stolen by cyber criminals. At least 12 million Australians have had their data exposed by hackers in recent months.

It can also be revealed that many of those impacted learnt they were victims of data theft only after being contacted by the ABC. They said they were either not adequately notified by the organisations responsible for securing their data, or were misled as to the gravity of the breach.

One of the main hubs where stolen data is published is a forum, easily discoverable through Google, which appeared only eight months ago and has soared in popularity, much to the alarm of global cyber intelligence experts. Anonymous users on the forum and similar websites regularly hawk stolen databases collectively containing millions of Australians' personal information.
Others were seen offering generous incentives to those daring enough to go after specific targets, such as one post seeking classified intelligence on the development of Australian submarines.

"There's a criminal's cornucopia of information available on the clear web, which is the web that's indexed by Google, as well as in the dark web," said CyberCX director of cyber intelligence Katherine Mansted.

"There's a very low barrier of entry for criminals … and often what we see with foreign government espionage or cyber programs: they're not above buying tools or buying information from criminals either."

In one case, law student Zac's medical information, pilfered in one of Australia's most troubling cyber breaches, was freely published by someone without a clear motive.

Zac has a rare neuromuscular disorder which has left him unable to walk and prone to severe weakness and fatigue. The ABC has agreed not to use his full name because he fears the stolen information could be used to locate him.

His sensitive personal data was stolen in May in a cyber attack on CTARS, a company that provides a cloud-based client management system to National Disability Insurance Scheme (NDIS) and NSW out-of-home-care service providers.

The National Disability Insurance Agency (NDIA), which is responsible for the NDIS, told a Senate committee it had confirmed with CTARS that all 9,800 affected participants had been notified.

But ABC Investigations has established this is not the case. The ABC spoke with 20 victims of the breach. All but one (who later found a notice in her junk mail) said they had not received a notification or even heard of the hack.

The leaked CTARS database, verified by the ABC, included Medicare numbers, medical information, tax file numbers, prescription records, mental health diagnoses, welfare checks, and observations about high-risk behaviour such as eating disorders, self-harm and suicide attempts.
"It's really, really violating," said Zac, whose leaked data included severe allergy listings for common food and medicine. "I may not like to think of myself as vulnerable … but I guess I am quite vulnerable, particularly living alone.

"Allergy records, things that are really sensitive, [are kept] private between me and my doctor and no one else but the people who support me.

"That's not the sort of information that you want getting into the wrong hands, particularly when ... you don't have a lot of people around you to advocate for you."

The CTARS database is just one of many thousands being traded on the ever-growing cybercrime black market. These postings appear both on the clear web, used every day through common web browsers, and on the dark web, which requires special software to access.

The scale of the problem is illustrated by the low prices being demanded for confidential data. ABC Investigations found users selling personal information and login credentials for individual Australian accounts, including MyGov, the ATO and Virgin Money, for between $1 and $10 USD.

MyGov and ATO services are built with two-factor authentication, which protects accounts even when usernames and passwords are compromised, but those same login details could be used to break into less-secure services.

One cyber intelligence expert showed the ABC a popular hackers' forum on which remote access to an Australian manufacturing company was auctioned for up to $500. He declined to identify the company.

CyberCX's Ms Mansted said the "black economy" in stolen data and hacking services was by some measures the third-largest economy in the world, surpassed only by the US and Chinese economies.

"The cost of buying a person's personal information or buying access to hack into a corporation, that's actually declining over time, because there is so much information and so much data out there," said Ms Mansted.
Cyber threat investigator Paul Nevin monitors online forums where hundreds of Australians' login data are traded each week.

"The volume of them was staggering to me," said Mr Nevin, whose company Cybermerc runs surveillance on malicious actors and trains Australian defence officials.

"In the past, we'd see small scatterings of accounts, but now this whole marketplace has been commoditised and fully automated.

"The development of that capability has only been around for a few years, but it shows you just how successful these actors are at what they do."

Explosive details leaked about private school

The cyber attack on Medibank last month by Russian criminal group REvil brought home the devastation cyber crime can inflict.

The largest health insurer in the country is now facing a possible class action lawsuit after REvil accessed the data of 9.7 million current and former customers and published highly sensitive medical information online.

On the dark web, Russian and Eastern European criminal organisations host sites where they post ransom threats and later leak databases if the ransom is not paid. The groups research their targets to inflict maximum damage.

Victims range from global corporations, including defence firm Thales and consulting company Accenture, to Australian schools.

In Melbourne, the Kilvington Grammar School community is reeling after more than 1,000 current and former students had their personal data leaked in October by a prolific ransomware gang, Lockbit 3.0. The independent school informed parents via emails, including one on November 2 that stated an "unknown third party has published a limited amount of data taken from our systems".

Correspondence sent to parents indicated this "sensitive information" included contact details of parents, Medicare details and health information such as allergies, as well as some credit card information.

However, the cache of information actually published by Lockbit 3.0 was far more extensive than initially suggested.

ABC Investigations can reveal the ransomware group published highly confidential documents containing the bank account numbers of parents, legal and debt disputes between the school and families, report cards, and individual test results.

Most shocking was the publication of details concerning the investigation into a teacher accused of assaulting a child, and of privileged legal advice about the death of a student.

Kilvington Grammar has been at the centre of a coronial inquest into Lachlan Cook, 16, who died after suffering complications of Type 1 diabetes during a school trip to Vietnam in 2019. Lachlan became critically ill and started vomiting, which was mistaken for gastroenteritis rather than a rare complication of his diabetes.

The coroner has indicated she will find the death was preventable because neither the school nor the tour operator, World Challenge, provided specific care for the teenager's diabetes.

Lachlan's parents declined to comment, but ABC Investigations understands they did not receive notification from the school that sensitive legal documents about his death had been stolen and published online.

Other parents whose details were compromised told the ABC they were frustrated by the school's failure to explain the scale of the breach.

"That's distressing that this type of data has been accessed," said father of two Paul Papadopoulos. "It's absolutely more sensitive [than parents were told] and I think any person would want to have known about it."

In a statement to the ABC, Kilvington Grammar did not address specific questions about the Cook family tragedy or whether any ransom was demanded or paid.

The school's marketing director, Camilla Fiorini, acknowledged its attempt to notify families of the specifics of what personal data was stolen was an "imperfect process".

"We have adopted a conservative approach and contacted all families that may have been impacted," she said.

"We listed, to the best of our abilities, what data had been accessed ... we also suggested additional steps those individuals can consider taking to further protect their information.

"The school is deeply distressed by this incident and the impact it has had on our community."
Other Australian organisations recently targeted by Lockbit 3.0 included a law firm, a wealth management firm for high-net-worth individuals, and a major hospitality company.

Blame game leaves victims out in the cold

Kilvington Grammar's failure to properly notify the victims of the data theft is not an isolated case, and its targeting by a ransomware group is emblematic of a growing apparatus commoditising stolen personal information.

Australian Federal Police (AFP) Cybercrime Operations Commander Chris Goldsmid told the ABC personal data was becoming "increasingly valuable to cybercriminals who see it as information they can exploit for financial gain".

"Cybercriminals can now operate at all levels of technical ability and the tools they employ are easily accessible online," he warned.

He added that the number of cybercrime incidents had risen 13 per cent from the previous financial year, to 67,500 reports, which is likely a conservative figure.

"We suspect there are many more victims but they are too embarrassed to come forward, or they have not realised what has happened to them is a crime," Commander Goldsmid said.

While authorities and the Federal Government have warned Medibank customers to be on high alert for identity thieves, many other Australians are unaware they are victims.

Under the Privacy Act, all government agencies, organisations that hold health information, and companies with an annual turnover above $3 million are required to notify individuals when their data has been breached if the breach is deemed "likely to cause serious harm".

After CTARS was hacked in May, the company published a statement about the hack on its website but devolved its responsibility to inform its NDIS recipients to the 67 individual service providers affected by the breach.

When ABC Investigations asked CTARS why many of the impacted NDIS recipients were not notified, it said it had decided the process was best handled by each provider.
"The OAIC [Office of the Australian Information Commissioner] suggests that notifications are usually best received from the organisation who has a relationship with impacted individuals, in this case the service providers," a CTARS spokesperson said.

"CTARS worked extensively to support the service providers in being able to ... bring the notification to their clients' attention."

However, the NDIA told the ABC this responsibility lay not with those individual providers, but with CTARS.

"The Agency's engagement with CTARS following the breach indicated that CTARS was fulfilling all its obligations under the Privacy Act in relation to the breach," an NDIA spokesperson said. "The Agency has reinforced with CTARS its obligation to inform users of their services."

This has provided little comfort to Zac and other CTARS victims whose personal information may never be erased from the internet.

"It's infuriating, it's shocking and it's disturbing," said Zac.

"It makes me really angry to know that multiple government agencies and these private support companies, who I would have thought would be duty bound to hold my best interests at heart … especially when my safety is at risk … that they at no level attempted to get in contact with me and assist me in protecting my information."

Zac's former service provider, Southern Cross Support Services, did not respond to the ABC's questions.

Another victim whose data was published on the same forum as the CTARS data is Karen Heath. The Victorian woman has been the victim of two hacks in the past month: one of Optus' customer data and another of confidential information stored by MyDeal, which is owned by retail giant Woolworths Group.

Woolworths told the ABC it has "enhanced" its security and privacy practices since the MyDeal hack, and it "unreservedly apologise[d] for the considerable concern the MyDeal breach has caused".

But Ms Heath remains anxious.
"You feel a bit helpless [and] you get worried about it," Ms Heath said. "I don't even know that I'll shop at Woolworths again ... they own MyDeal. They have insurance companies, they have all sorts of things.

"So where does it end?"

For original post, please visit: https://amp.abc.net.au/article/101700974

By Earl Aguilera and Roberto de Roock, https://doi.org/10.1093/acrefore/9780190264093.013.1438

Summary

"As contemporary societies continue to integrate digital technologies into varying aspects of everyday life—including work, schooling, and play—the concept of digital game-based learning (DGBL) has become increasingly influential. The term DGBL is often used to characterize the relationship of computer-based games (including games played on dedicated gaming consoles and mobile devices) to various learning processes or outcomes. The concept of DGBL has its origins in interdisciplinary research across the computational and social sciences, as well as the humanities. As interest in computer games and learning within the field of education began to expand in the late 20th century, DGBL became somewhat of a contested term. Even foundational concepts such as the definition of games (as well as their relationship to simulations and similar artifacts), the affordances of digital modalities, and the question of what “counts” as learning continue to spark debate among positivist, interpretivist, and critical framings of DGBL. Other contested areas include the ways that DGBL should be assessed, the role of motivation in DGBL, and the specific frameworks that should inform the design of games for learning.

Scholarship representing a more positivist view of DGBL typically explores the potential of digital games as motivators and influencers of human behavior, leading to the development of concepts such as gamification and other uses of games for achieving specified outcomes, such as increasing academic measures of performance, or as a form of behavioral modification. Other researchers have taken a more interpretive view of DGBL, framing it as a way to understand learning, meaning-making, and play as social practices embedded within broader contexts, both local and historical. Still others approach DGBL through a more critical paradigm, interrogating issues of power, agency, and ideology within and across applications of DGBL. Within classrooms and formal settings, educators have adopted four broad approaches to applying DGBL: (a) integrating commercial games into classroom learning; (b) developing games expressly for the purpose of teaching educational content; (c) involving students in the creation of digital games as a vehicle for learning; and (d) integrating elements such as scoreboards, feedback loops, and reward systems derived from digital games into non-game contexts—also referred to as gamification.

Scholarship on DGBL focusing on informal settings has alternatively highlighted the socially situated, interpretive practices of gamers; the role of affinity spaces and participatory cultures; and the intersection of gaming practices with the lifeworlds of game players.

As DGBL has continued to demonstrate influence on a variety of fields, it has also attracted criticism. Among these critiques is the question of the relative effectiveness of DGBL for achieving educational outcomes. Critiques of the quality and design of educational games have also been raised by educators, designers, and gamers alike. Interpretive scholars have tended to question the primacy of institutionally defined approaches to DGBL, highlighting instead the importance of understanding how people make meaning through and with games beyond formal schooling. Critical scholars have also identified issues in the ethics of DGBL in general and gamification in particular as a form of behavior modification and social control. These critiques often intersect and overlap with criticism of video games in general, including issues of commercialism, antisocial behaviors, misogyny, addiction, and the promotion of violence. Despite these criticisms, research and applications of DGBL continue to expand within and beyond the field of education, and evolving technologies, social practices, and cultural developments continue to open new avenues of exploration in the area." To access the original article, please visit:

https://doi.org/10.1093/acrefore/9780190264093.013.1438

By Joel Schwarz and Emily Cherkin

"The Federal Trade Commission (FTC) recently issued a policy statement about the application of the Children’s Online Privacy Protection Act (COPPA) to Ed Tech providers, warning that they can only use student personally identifiable information (PII) collected with school consent for the benefit of the school, and that they cannot retain it for longer than required to meet the purpose of collection.

Ironically, days later, a Human Rights Watch investigative report observed that almost 90 percent of Ed Tech products it reviewed “appeared to engage in data practices that put children’s rights at risk.”

These revelations are no surprise to children’s privacy advocacy groups like the Student Data Privacy Project. But in the midst of a COVID fog, much like the fog of war, Ed Tech remained largely insulated from scrutiny, siphoning student PII with impunity.

Taking a step back, it’s important to understand how Ed Tech providers access and collect this information. In 1974, the Family Educational Rights and Privacy Act (FERPA) was passed to protect school-held PII, such as that found in student directories. But FERPA contains a “School Official Exception” that allows schools to disclose children’s PII without parental consent so long as it’s disclosed for a “legitimate educational interest” and the school maintains “direct control” over the provider.

In 1974, it was easy to maintain direct control over entities because there was no internet.

Today, schools increasingly rely on Ed Tech platforms to provide digital learning, pursuant to an electronically signed agreement, hosted by a nameless, faceless server somewhere in the ether. Yet the law has barely changed since 1974. For example, the Department of Education (DOE) maintains that direct control can be established through a contract between the parties, despite the fact that online contracts and Terms of Service are often take-it-or-leave-it propositions that favor online services. In law, we call these “contracts of adhesion.” In Ed Tech advocacy, we call them data free-for-alls.

Given these concerns, in 2021 the Student Data Privacy Project (SDPP) helped parents from North Carolina to Alaska file access requests with their children’s schools under a FERPA provision mandating that schools provide parents access to their children’s PII. Most parents received nothing. Many schools seemed unable to get their Ed Tech providers to respond, and other schools didn’t know how to make the request of the provider.

One Minnesota parent received over 2,000 files, revealing a disturbing amount of personal information held by Ed Tech. How might this data be used to profile this child? And how does this comport with the FTC’s warning about retaining information only for as long as needed to fulfill the purpose of collection?

Aside from this isolated example, most parents failed to receive a comprehensive response. As a result, SDPP worked with parents to file complaints with the DOE in July 2021. As the one-year anniversary of these complaints draws near, however, the DOE has taken no substantive action.

Ironically, in cases where the DOE sent copies of the parent’s complaint to the affected school district, the school’s response only bolstered concerns. One Alaska school district misapplied a Supreme Court case dealing with FERPA, asserting that “data gathered by technology vendors is not ‘educational records’ under FERPA” because the Ed Tech records are not “centrally stored” by the school. Yet that school attached its FERPA addendum to that same letter, which explicitly states that it “includes all data specifically protected by FERPA, including student education records, in any form.”

Unfortunately, this is indicative of widespread confusion among schools about applying FERPA to Ed Tech. Yet parents have few options for holding Ed Tech providers accountable. Parents can’t sue Ed Tech because the schools have the direct contractual relationship. Parents can’t directly enforce FERPA because FERPA doesn’t offer a private right of action. Even state privacy laws are of little help when consent for sharing is given, and FERPA allows schools to consent on parents’ behalf.

There is some cause for hope. For example, President Biden’s March 1 State of the Union speech challenged Congress to strengthen children’s privacy protections “by banning online platforms from excessive data collection and targeted advertising for children.” And in January, Rep. Tom Emmer (R-Minn.) sent DOE a letter inquiring about the SDPP parent complaints. Most recently, we have the FTC’s warning to Ed Tech about protecting student data privacy. Beyond that, however, we’ve seen little progress, or action, by the government. So here are three things that need to happen to hold Ed Tech accountable:

- The FTC needs to enforce COPPA obligations on Ed Tech providers.

- The DOE must enforce FERPA, compelling schools to hold Ed Tech vendors accountable.

- Congress must update FERPA for the realities of the 21st century.

A 50th anniversary is always a big occasion in a relationship, warranting a grand gesture to renew the commitment. So what better gesture for the 50th anniversary of FERPA in 2024 than for the government to renew its commitment to protecting the privacy of nearly 50 million students by enforcing the law and closing the gaps that have allowed Ed Tech providers to exploit children’s PII for their own profit, without oversight or accountability?" To view original post, please visit: https://thehill.com/opinion/cybersecurity/3586011-student-privacy-laws-remain-the-same-but-children-are-now-the-product/

"Colleges and universities experienced a surge in ransomware attacks in 2021, and those attacks had significant operational and financial costs, according to a new report."

By Susan D'Agostino

“You can collect that money in a couple of hours,” a ransomware hacker’s representative wrote in a secure June 2020 chat with a University of California, San Francisco, negotiator about the $3 million ransom demanded. “You need to take us seriously. If we’ll release on our blog student records/data, I’m 100% sure you will lose more than our price what we ask.”

The university later paid $1.14 million to gain access to the decryption key.

Colleges and universities worldwide experienced a surge in ransomware attacks in 2021, and those attacks had significant operational and financial costs, according to a new report from Sophos, a global cybersecurity leader. The survey polled 5,600 IT professionals across 31 countries, 410 of them from higher education. Though most of the education victims succeeded in retrieving some of their data, few retrieved all of it, even after paying the ransom.

“The nature of the academic community is very collegial and collaborative,” said Richard Forno, assistant director of the University of Maryland Baltimore County Center for Cybersecurity. “There’s a very fine line that universities and colleges have to walk between facilitating academic research and education and maintaining strong security.”

That propensity of colleges to share openly and widely can make the institutions susceptible to attacks. Nearly three-quarters (74 percent) of ransomware attacks on higher ed institutions succeeded. Hackers’ efforts in other sectors were not as fruitful, including in business, health care and financial services, where 68 percent, 61 percent and 57 percent of attacks succeeded, respectively. Given this above-average success rate in encrypting higher education institutions’ data, cybercriminals may view colleges and universities as soft targets for ransomware attacks.

Despite high-profile ransomware attacks such as one in 2020 that targeted UC San Francisco, higher ed institutions’ efforts to protect their networks continued to fall short in 2021."... For original post, please visit: https://www.insidehighered.com/news/2022/07/22/ransomware-attacks-against-higher-ed-increase

Published: July 14, 2022 9.43am EDT By Stephen J. Neville and Natalie Coulter, York University, Canada

"In many busy households around the world, it’s not uncommon for children to shout out directives to Apple’s Siri or Amazon’s Alexa. They may make a game out of asking the voice-activated personal assistant (VAPA) what time it is, or requesting a popular song. While this may seem like a mundane part of domestic life, there is much more going on. The VAPAs are continuously listening, recording and processing acoustic happenings in a process that has been dubbed “eavesmining,” a portmanteau of eavesdropping and datamining. This raises significant concerns pertaining to issues of privacy and surveillance, as well as discrimination, as the sonic traces of people’s lives become datafied and scrutinized by algorithms.

These concerns intensify as we apply them to children. Their data is accumulated over lifetimes in ways that go well beyond what was ever collected on their parents, with far-reaching consequences that we haven’t even begun to understand.

Always listening

The adoption of VAPAs is proceeding at a staggering pace as it extends to include mobile phones, smart speakers and the ever-increasing number of products that are connected to the internet. These include children’s digital toys, home security systems that listen for break-ins and smart doorbells that can pick up sidewalk conversations.

There are pressing issues that derive from the collection, storage and analysis of sonic data as they pertain to parents, youth and children. Alarms have been raised in the past: in 2014, privacy advocates raised concerns about how much the Amazon Echo was listening to, what data was being collected and how the data would be used by Amazon’s recommendation engines.

And yet, despite these concerns, VAPAs and other eavesmining systems have spread exponentially. Recent market research predicts that by 2024, the number of voice-activated devices will explode to over 8.4 billion.

Recording more than just speech

There is more being gathered than just uttered statements, as VAPAs and other eavesmining systems overhear personal features of voices that involuntarily reveal biometric and behavioural attributes such as age, gender, health, intoxication and personality. Information about acoustic environments (like a noisy apartment) or particular sonic events (like breaking glass) can also be gleaned through “auditory scene analysis” to make judgments about what is happening in that environment.

Eavesmining systems already have a recent track record of collaborating with law enforcement agencies and being subpoenaed for data in criminal investigations. This raises concerns about other forms of surveillance creep and profiling of children and families. For example, smart speaker data may be used to create profiles such as “noisy households,” “disciplinary parenting styles” or “troubled youth.” This could, in the future, be used by governments to profile those reliant on social assistance or families in crisis, with potentially dire consequences.

There are also new eavesmining systems, called “aggression detectors,” presented as a solution to keep children safe. These technologies consist of microphone systems loaded with machine learning software, dubiously claiming that they can help anticipate incidents of violence by listening for signs of rising volume and emotions in voices, and for other sounds such as glass breaking.

Monitoring schools

Aggression detectors are advertised in school safety magazines and at law enforcement conventions. They have been deployed in public spaces, hospitals and high schools under the guise of being able to pre-empt and detect mass shootings and other cases of lethal violence.

But there are serious issues around the efficacy and reliability of these systems. One brand of detector repeatedly misinterpreted vocal cues of kids, including coughing, screaming and cheering, as indicators of aggression. This raises the question of who is being protected and who will be made less safe by its design.

Some children and youth will be disproportionately harmed by this form of securitized listening, and the interests of all families will not be uniformly protected or served. A recurrent critique of voice-activated technology is that it reproduces cultural and racial biases by enforcing vocal norms and misrecognizing culturally diverse forms of speech in relation to language, accent, dialect and slang.

We can anticipate that the speech and voices of racialized children and youth will be disproportionately misinterpreted as aggressive sounding. This troubling prediction should come as no surprise as it follows the deeply entrenched colonial and white supremacist histories that consistently police a “sonic color line.”

Sound policy

Eavesmining is a rich site of information and surveillance, as children and families’ sonic activities have become valuable sources of data to be collected, monitored, stored, analysed and sold without the subject’s knowledge to thousands of third parties. These companies are profit-driven, with few ethical obligations to children and their data.

With no legal requirement to erase this data, the data accumulates over children’s lifetimes, potentially lasting forever. It is unknown how long and how far-reaching these digital traces will follow children as they age, how widespread this data will be shared or how much this data will be cross-referenced with other data. These questions have serious implications for children’s lives both presently and as they age.

There are myriad threats posed by eavesmining in terms of privacy, surveillance and discrimination. Individualized recommendations, such as informational privacy education and digital literacy training, will be ineffective in addressing these problems and place too great a responsibility on families to develop the necessary literacies to counter eavesmining in public and private spaces.

We need to consider advancing a collective framework that combats the unique risks and realities of eavesmining. Perhaps developing a set of Fair Listening Practice Principles, an auditory spin on the “Fair Information Practice Principles,” would help evaluate the platforms and processes that impact the sonic lives of children and families."... For full post, please visit: https://theconversation.com/amp/hey-siri-virtual-assistants-are-listening-to-children-and-then-using-the-data-186874

"We need much stricter controls on the brands and influencers that share photos of children on social media, according to a pediatric consultant."

By Michael Staines

"In a recent column for The Irish Examiner, Dr Niamh Lynch said we need to re-think how we use images of children on social media – calling for an end to what she called ‘digital child labour.’

She said children’s rights to privacy and safety were being breached without their consent, and often for financial gain.

On The Pat Kenny Show this morning, she said the article was in response to the rise in ‘sharenting’ and ‘mumfluencers.’ “Without picking one example - and that wouldn’t actually be fair because I think a bit of responsibility has to be taken by the social media companies themselves and by the companies that use these parents - but certainly there would be tales of children being clearly unhappy or tired or not in the mood and yet it has become their job to promote a product or endorse a product or whatever,” she said.

“These children are doing work and because they’re young, they can’t actually consent to that. Their privacy can sometimes be violated and there is a whole ethical minefield around it.”

'Digital child labour'

She said Ireland needs tighter legislation to protect children’s rights and privacy – and to ensure there is total transparency about the money changing hands.

“People don’t realise that these children are working,” she said.

“These children are doing a job.

“It is a job that can at times compromise their safety. It is a job that compromises their privacy and it is certainly a job they are doing without any sort of consent.

“It is very different say with a child in an ad for a shopping centre or something like that. Where you see the face of the child, but you know nothing about them.

“These children, you know everything about them really in many cases.

“So yes, I would say there needs to be tighter legislation around it. It needs to be clear because very often it is presented within the sort of cushion of family life and the segue between what is family life and what is an ad isn’t always very clear.

“There needs to be more transparency really about transactions that go on in the background.”

Privacy

She said there is a major issue around child safety when so much personal information is being shared.

“The primary concern would be the safety of the child because once a child becomes recognisable separate to the parent then there’s the potential for them to become a bit of a target,” she said. “When you think about how much is shared about these children online, it is pretty easy to know who their siblings are, what their date of birth is, when they lost their last tooth, what their pet’s name is.

“There is so much information out there about certain children and there are huge safety concerns around that then as well.”

Legislation

Dr Lynch said we won’t know the impact on many children for at least another decade; however, children who featured in early YouTube videos are already coming out and talking about what an “uncomfortable experience” it was for them.

“I think the parents themselves to a degree perhaps are also being exploited by large companies who are using them to use their child to promote products,” she said.

“So, I think large companies certainly need to take responsibility and perhaps we should call those companies out when we see that online.”

“The social media companies really should tighten up as well.” For audio interview and full post, please visit:

California's Largest District & Riverside County Add Nearly 1 Million To the Number of Students Whose Private Data Was Stolen From Illuminate

By Kristal Kuykendall "The breach of student data that occurred during a January 2022 cyberattack targeting Illuminate Education’s systems is now known to have impacted the nation’s second-largest school district, Los Angeles Unified, with 430,000 students, which has notified state officials along with 24 other districts in California and one in Washington state.

The data breach notifications posted on the California Attorney General’s website in the past week by LAUSD, Ceres Unified School District with 14,000 students, and Riverside County Office of Education representing 23 districts and 431,000 students, mean that Illuminate Education’s data breach leaked the private information of well over 3 million students — and potentially several times that total.

The vast reach of the data breach will likely never be fully known because most state laws do not require public disclosure of data breaches. Illuminate has said in a statement that the data of current and former students was compromised at the impacted schools, but in multiple email communications with THE Journal it declined to specify the total number of students impacted. The estimated total of 3 million is based on New York State Education Department official estimates that “at least 2 million” statewide were impacted, plus the current enrollment figures of the other districts that have since disclosed their student data was also breached by Illuminate.

California requires a notice of a data breach to be posted on the attorney general’s website, but the notices do not include any details such as what data was stolen, nor the number of students affected; the same is true in Washington, where Impact Public Schools in South Puget Sound notified the state attorney general this week that its students were among those impacted by the Illuminate incident.

Oklahoma City Public Schools on May 13 added its 34,000 students to the ever-growing list of those impacted by the Illuminate Education data breach; thus far, it is the only district in Oklahoma known to have been among the hundreds of K–12 schools and districts across the country whose private student data was compromised while stored within Illuminate’s systems. Oklahoma has no statewide public disclosure requirements, so it’s left up to local districts to decide whether and how to notify parents in the event of a breach of student data, Oklahoma Department of Education officials told THE Journal recently.

In Colorado, where nine districts have publicly disclosed that the Illuminate breach included the data of their combined 140,000 students, there is no legal mandate for school districts or ed tech vendors to notify state education officials when student data is breached, Colorado Department of Education Director of Communications Jeremy Meyer told THE Journal. State law does not require student data to be encrypted, he said, and CDE has no authority to collect data on or investigate data breaches. Colorado’s Student Data Transparency and Security Act, passed in 2016, goes no further than “strongly urging” local districts to stop using ed tech vendors who leak or otherwise compromise student data.

Most of the notifications shared by districts included in the breach have simply shared a template letter, or portions of it, signed by Illuminate Education. It states that Social Security numbers were not part of the private information that was stolen during the cyberattack.

Notification letters shared by impacted districts have stated that the compromised data included student names, academic and behavioral records, enrollment data, disability accommodation information, special education status, demographic data, and in some cases the students’ reduced-price or free lunch status.

Illuminate has told THE Journal that the breach was discovered after it began investigating suspicious access to its systems in early January. The incident resulted in a week-long outage of all Illuminate’s K–12 school solutions, including IO Classroom (previously named Skedula), PupilPath, EduClimber, IO Education, SchoolCity, and others, according to its service status site. The company’s website states that its software products serve over 5,000 schools nationally with a total enrollment of about 17 million U.S. students.

Hard-Hit New York Responds with Investigation of Illuminate

The New York State Education Department on May 5 told THE Journal that 567 schools in the state — including “at least” 1 million current and former students — were among those impacted by the Illuminate data breach, and NYSED data privacy officials opened an investigation on April 1.

The list of all New York schools impacted by the data breach was sent to THE Journal in response to a Freedom of Information request; NYSED officials said the list came from Illuminate. Each impacted district was working to confirm how many current and former students were among those whose data were compromised, and each is required by law to report those totals to NYSED, so the total number of students affected was expected to grow, the department said." For original publication, please visit: https://thejournal.com/articles/2022/05/27/illuminate-breach-included-los-angeles-riverside-county-pushing-total-impacted-well-over-2-million.aspx?m=1

By Lonnie Lee Hood "Colleges and universities are increasingly using digital tools to prevent cheating during online exams, since so many students are taking class from home or their dorm rooms in the era of COVID-19.

The programs — prominent software options include Pearson VUE and Honorlock — analyze imagery from students' webcams to detect behavior that might be linked to cheating.

Needless to say, there are pain points.

University of Kentucky professor Josef Fruehwald, for instance, said in a popular TikTok video that he wouldn't trust educators who use the software; the video drew 2.3 million views and dozens of comments from stressed-out students. "One of my French exams got flagged for cheating because I was crying for the whole thing and my French prof had to watch 45 min of me quietly sobbing," one user replied. "Since COVID, LSAT uses a proctoring system," another said. "I was yelled at for having a framed quote from my grandmother on the wall." No less harrowing, one student said a proctor asked them to change into "something more conservative" during the exam, in the student's own home.

Fruehwald got so many responses he made a Twitter thread about it — whereupon tweeps started sharing even more allegations. "My husband has two classes left for his BFA and one of them is a math class that requires an assessment test before enrolling," wrote one person. "He should have graduated two years ago but he couldn't take the friggin math class because THE SOUND OF HIS LAPTOP'S FAN SET OFF THE PROCTOR SOFTWARE."

Representatives of the anti-cheating software market did push back.

"Honorlock uses facial detection and ensures certain facial landmarks are present in the webcam during the assessment," said Honorlock's chief marketing officer Tess Mitchell, after this story was initially published. "Honorlock records the student’s webcam, so crying is visible, however, crying does not trigger a flag or proctor intervention."

Eye tracking software isn't exactly knocking it out of the park in public opinion lately. One startup is forcing people to watch ads with their eyelids all the way open, and another is offering crypto in exchange for eyeball time. The pandemic has changed a lot about the way society runs, and education seems to be a particularly challenged sector. As teachers quit jobs and students say they're silently sobbing into eye tracking programs on a computer screen, it's not hard to see why.

Updated with additional context and a statement from Honorlock. For original post, please visit: https://futurism.com/college-students-exam-software-cheating-eye-tracking-covid?taid=62713f29ee8b820001167731

By Andrea Vittorio

"The Federal Trade Commission is planning to scrutinize educational technology in its enforcement of children’s online privacy rules. The commission is slated to vote at a May 19 meeting on a policy statement related to how the Children’s Online Privacy Protection Act applies to edtech tools, according to an agenda issued Thursday. The law, known as COPPA, gives parents control over what information online platforms can collect about their kids. Parents concerned about data that digital learning tools collect from children have called for stronger oversight of technology increasingly used in schools.

The FTC’s policy statement “makes clear that parents and schools must not be required to sign up for surveillance as a condition of access to tools needed to learn,” the meeting notice said.

It’s the first agency meeting since Georgetown University law professor Alvaro Bedoya was confirmed as a member of the five-seat commission, giving Chair Lina Khan the Democratic majority needed to pursue her policy goals. Bedoya has said he wants to strengthen protections for children’s digital data.

Companies that violate COPPA can face fines from the FTC. Past enforcement actions under the law have been brought against companies including TikTok and Google’s YouTube.

Alphabet Inc.’s Google has come under legal scrutiny for collecting data on users of its educational tools and relying on schools to give consent for data collection on parents’ behalf.

New Mexico’s attorney general recently settled a lawsuit against Google that alleged COPPA violations. Since the suit was filed in 2020, Google has launched new features to protect children’s data.

"Facial recognition technology doesn’t just allow children to make cashless payments – it can gauge their mood and behaviour in class" By Stephanie Hare "A few days ago, a friend sent me a screenshot of an online survey sent by his children’s school and a company called ParentPay, which provides technology for cashless payments in schools. “To help speed up school meal service, some areas of the UK are trialling using biometric technology such as facial identity scanners to process payments. Is this something you’d be happy to see used in your child’s school?” One of three responses was allowed: yes, no and “I would like more information before agreeing”.

My friend selected “no”, but I wondered what would have happened if he had asked for more information before agreeing. Who would provide it? The company that stands to profit from his children’s faces? Fortunately, Defend Digital Me’s report, The State of Biometrics 2022: A Review of Policy and Practice in UK Education, was published last week, introduced by Fraser Sampson, the UK’s biometrics and surveillance camera commissioner. It is essential reading for anyone who cares about children. First, it reminds us that the Protection of Freedoms Act 2012, which protects children’s biometrics (such as face and fingerprints), applies only in England and Wales. Second, it reveals that the information commissioner’s office has still not ruled on the use of facial recognition technology in nine schools in Ayrshire, which was reported in the media in October 2021, much less the legality of the other 70 schools known to be using the technology across the country. Third, it notes that the suppliers of the technology are private companies based in the UK, the US, Canada and Israel.

The report also highlights some gaping holes in our knowledge about the use of facial recognition technology in British schools. For instance, who in government approved these contracts? How much has this cost the taxpayer? Why is the government using a technology that is banned in several US states and which regulators in France, Sweden, Poland and Bulgaria have ruled unlawful on the grounds that it is neither necessary nor proportionate and does not respect children’s privacy? Why are British children’s rights not held to the same standard as their continental counterparts? The report also warns that this technology does not just identify children or allow them to transact with their bodies. It can be used to assess their classroom engagement, mood, attentiveness and behaviour. One of the suppliers, CRB Cunninghams, advertises that it scans children’s faces every three months and that its algorithm “constantly evolves to match the child’s growth and change of appearance”.

So far, MPs have been strikingly silent on the use of such technology in schools. Instead, two members of the House of Lords have sounded the alarm. In 2019, Lord Clement-Jones put forward a private member’s bill for a moratorium and review of all uses of facial recognition technology in the UK. The government has yet to give this any serious consideration. Undaunted, his colleague Lord Scriven said last week that he would put forward a private member’s bill to ban its use in British schools.

It’s difficult not to wish the two lords well when you return to CRB Cunninghams’ boasts about its technology. “The algorithm grows with the child,” it proclaims. That’s great, then: what could go wrong?

Stephanie Hare is a researcher and broadcaster. Her new book is Technology Is Not Neutral: A Short Guide to Technology Ethics. For original post, please visit: https://www.theguardian.com/commentisfree/2022/may/08/face-up-to-it-this-surveillance-of-kids-in-schools-is-creepy

By Kyle M. L. Jones (MLIS, PhD)

Indiana University-Indianapolis (IUPUI)

"The COVID-19 pandemic changed American higher education in more ways than many people realize: beyond forcing schools to transition overnight to fully online learning, the health crisis has indirectly fueled institutions’ desire to datafy students in order to track, measure, and intervene in their lives. Higher education institutions now collect enormous amounts of student data, by tracking students’ performance and behaviors through learning management systems, learning analytic systems, keystroke clicks, radio frequency identification, and card swipes throughout campus locations. How do institutions use all this data, and what are the implications for student data privacy? Are the technologies as effective as institutions claim? This blog explores these questions and calls for higher education institutions to better protect students, their individuality, and their power to make the best choices for their education and lives.

When the pandemic prevented faculty and students from accessing their common campus haunts, including offices and classrooms, they relied on technologies to fill their information, communication, and education needs. Higher education was arguably better prepared than other organizations and institutions for immersive online education. For decades, universities and colleges have invested significant resources in networking infrastructures and applications to support constant communication and information sharing. Educational technologies, such as learning management systems (LMSs) licensed by Instructure (Canvas) and Blackboard, and productivity tools such as Microsoft’s Office365, are ubiquitous in higher education. So, while the transition to online education was difficult for some in pedagogical terms, the technological ability to do so was not: higher education was prepared.

Datafication Explained: How Institutions Quantify Students

The same technological ubiquity that has helped higher education succeed during the pandemic has also fueled institutions’ growing desire to datafy students for the purposes of observing, measuring, and intervening in their lives. These practices are not new to universities and colleges, which have long held that creating education records about students supports administrative record keeping and instruction. But data and informational conditions today are much different than just 10 to 20 years ago: it is now possible to track, capture, and analyze a student’s online information behaviors, communications, and system actions (e.g., clicks, keystrokes, facial movements), not to mention their granular academic history.

In non-pandemic times, when students are immersed in campus life, myriad sensors (e.g., WiFi, RFID) and systems (e.g., building and transactional card swipes) associated with a specific location also make it possible to analyze a student’s physical movements. These data points enable institutions to track where a student has been and with whom that student has associated, by examining similar patterns in the data.

How are institutions and the educational technology (edtech) companies they rely on using their growing stores of data? There have been infamous cases over the years, such as the Mount St. Mary’s “drown the bunnies” fiasco, when the previous president attempted to use predictive measures to identify and force out students unlikely to achieve academic success and be retained. Then-president Simon Newman, who was eventually fired, argued, “This is hard for [the faculty] because you think of the students as cuddly bunnies, but you can’t…. You just have to drown the bunnies…. put a Glock to their heads.” At the University of Arizona, the “Smart Campus research” project aims to “repurpose the data already being captured from student ID cards to identify those most at risk for not returning after their first year of college.” It used student ID card data to track and measure social interactions through time-stamp and geolocation metadata. The analysis enabled the university to map student interactions and their social networks, all for the purpose of predicting a student’s likelihood of being retained.

Edtech has also invested heavily in descriptive and predictive analytic capabilities, sometimes referred to as learning analytics. Common LMSs often record and share descriptive statistics with instructors concerning which pages and resources (e.g., PDFs, quizzes, etc.) a student has clicked on; some instructors use the data to create visualizations to make students aware of their engagement levels in comparison to their peers in a course. Other companies use their access to real-time system data, and to the students who create the data, to run experiments. Pearson gained attention for its use of social-psychological interventions on over 9,000 students at 165 institutions to test “whether students who received the messages attempted and completed more problems than their counterparts at other institutions.” While some characterize Pearson’s efforts as simple A/B testing, often used to examine interface tweaks on websites and applications, Pearson did the interventions based on its own ethical review, without input from any of the 165 institutions and without students’ consent.

Is Datafication Worth It? Privacy Considerations

The higher education data ecosystem, and the paths it opens for universities, edtech, and other third-party actors to use it, raise significant questions about the effects on students’ privacy. The datafication of student life may lead institutions to improve student learning as well as retention and graduation rates. Maybe studying student life at a granular, identifiable level, or even at broader subgroup levels, improves institutional decision making and an institution’s financial situation. But what are the costs of these gains? The examples above, many of which I have more comprehensively summarized and analyzed elsewhere, point to clear issues.

Chief among them is privacy. It is not normative for institutions—or the companies they contract for services—to expose a student’s life, regardless of the purposes or justifications. Yet, universities and colleges continue to push the point that they can do so and are often justified in doing so if it improves student success. But student success is a broad term. Whose success matters and warrants the intrusion? Often an analytic, especially a predictive measure, requires historical data, meaning that one student’s life is made analyzable only for another student downstream to benefit months or years later. And how do institutions define success? Student success may be learning gains, but education institutions often construe it as retention and graduation, which are just proxies.

When institutions datafy student life for some purpose other than to directly help students, they treat students as objects, not human beings with unique interests, goals, and autonomy over their lives. Institutions and others can use data and related artifacts to guide, nudge, and even manipulate student choices with an invisible hand, since students are rarely aware of the full reach of an institution’s data infrastructure. Students trust that institutions will protect identifiable data and information, but that trust is misplaced if institutions 1) are not transparent about their data practices and 2) do not enable students to make their own privacy choices to the greatest extent possible. Student privacy policies are often difficult for students to locate and understand. Moreover, institutions need to justify their analytic practices. They should provide an overview of the intention of their practice and explain the empirical support for that justification. If the practice is experimental, institutions must communicate that they have no clear evidence that the practice will produce benefits for students. If science supports the practice, institutions should summarize that science and provide it to students for review.

Many other policy and practice recommendations are relevant, as the literature outlines ethics codes, philosophical arguments, and useful principles for practice. The key point here is that the datafication of student life and the privacy problems it creates are justified only if higher education institutions protect students and put their interests first, treat students as humans, and respect their choices about their lives." To view original post, please visit: https://studentprivacycompass.org/the-datafication-of-student-life-and-the-consequences-for-student-data-privacy/


By Natasha Singer "The Federal Trade Commission on Monday cracked down on Chegg, an education technology firm based in Santa Clara, Calif., saying the company’s “careless” approach to cybersecurity had exposed the personal details of tens of millions of users.

In a legal complaint, filed on Monday morning, regulators accused Chegg of numerous data security lapses dating to 2017. Among other problems, the agency said, Chegg had issued root login credentials, essentially an all-access pass to certain databases, to multiple employees and outside contractors. Those credentials enabled many people to look at user account data, which the company kept on Amazon Web Services’ online storage system.

As a result, the agency said, a former Chegg contractor was able to use company-issued credentials to steal the names, email addresses and passwords of about 40 million users in 2018. In certain cases, sensitive details on students’ religion, sexual orientation, disabilities and parents’ income were also taken. Some of the data was later found for sale online.

Chegg’s popular homework help app is used regularly by millions of high school and college students. To settle the F.T.C.’s charges, the agency said Chegg had agreed to adopt a comprehensive data security program.

In a statement, Chegg said data privacy was a top priority for the firm and that the company had worked with the F.T.C. to reach a settlement agreement. The company said it currently has robust security practices, and that the incidents described in the agency’s complaint had occurred more than two years ago. Only a small percentage of users had provided data on their religion and sexual orientation as part of a college scholarship finder feature, the company said in the statement.

“Chegg is wholly committed to safeguarding users’ data and has worked with reputable privacy organizations to improve our security measures and will continue our efforts,” the statement said.

The F.T.C.’s enforcement action against Chegg, a prominent industry player, amounts to a warning to the U.S. education technology industry.

Since the early days of the pandemic in 2020, the education technology sector has enjoyed a surge in customers and revenue. To enable remote learning, many schools and universities rushed to adopt digital tools like exam-proctoring software, course management platforms and video meeting systems. Students and their families, too, turned in droves to online tutoring services and study aids like math apps. Among them, Chegg, which had a market capitalization of $2.7 billion at the end of trading on Monday, reported annual revenues of $776 million for 2021, an increase of 20 percent from the previous year. Some online learning systems proved so useful that many students, and their educational institutions, continued to use the tools even after schools and colleges returned to in-person teaching.

But the fast growth of digital learning tools during the pandemic also exposed widespread flaws. Many online education services record, store and analyze a trove of data on students’ every keystroke, swipe and click — information that can include sensitive details on children’s learning challenges or precise locations. Privacy and security experts have warned that such escalating surveillance may benefit companies more than students.

In March, Illuminate Education, a leading provider of student-tracking software, reported a cyberattack on certain company databases. The incident exposed the personal information of more than a million current and former students across dozens of districts in the United States — including New York City, the nation’s largest public school system.

In May, the F.T.C. issued a policy statement saying that it planned to crack down on ed tech companies that collected excessive personal details from schoolchildren or failed to secure students’ personal information.

The F.T.C. has a long history of fining companies for violating children’s privacy on services like YouTube and TikTok. The agency is able to do so under a federal law, the Children’s Online Privacy Protection Act, which requires online services aimed at children under 13 to safeguard youngsters’ personal data and obtain parental permission before collecting it.

But the federal complaint against Chegg represents the first case under the agency’s new campaign focused specifically on policing the ed-tech industry and protecting student privacy. In the Chegg case, the homework help platform is not aimed at children, and the F.T.C. did not invoke the children’s privacy law. The agency accused the company of unfair and deceptive business practices.

Chegg was founded in 2005 as a textbook rental service for college students. Today it is an online learning giant that rents e-textbooks. But it is most known as a homework help platform where, for $15.95 per month, students can find ready answers to millions of questions on course topics like relativity or mitosis. Students may also ask Chegg’s online experts to answer specific study or test questions they have been assigned.

Teachers have complained that the service has enabled widespread cheating. Students even have a nickname for copying answers from the platform: “chegging.”

Chegg’s privacy policy promised users that the company would take “commercially reasonable security measures to protect” their personal information. Chegg’s scholarship finder service, for instance, collected information like students’ birth dates as well as details on their religion, sexual orientation and disabilities, the F.T.C. said. But regulators said the company failed to use reasonable security measures to protect user data, even after a series of security lapses that enabled intruders to gain access to sensitive student data and employees’ financial information.

As part of the consent agreement proposed by the F.T.C., Chegg must provide security training to employees and encrypt user data. Chegg must also give consumers access to the personal information it has collected about them — including any precise location data or persistent identifiers like IP addresses — and enable users to delete their records.

Other online learning services may also hear from regulators. The F.T.C. disclosed in July that it was pursuing a number of nonpublic investigations into ed tech providers.

“Chegg took shortcuts with millions of students’ sensitive information,” Samuel Levine, the director of the agency’s Bureau of Consumer Protection, said in a news release on Monday. “The commission will continue to act aggressively to protect personal data.”

By Mahima Arya and Nina Loshkajian "In 2020, New York became a national civil rights leader, the first state in the country to ban facial recognition in schools. But almost two years later, state officials are examining whether to reverse course and give a passing grade to this failing technology. Wasting money on biased and faulty tech will only make schools a harsher, more dangerous environment for students, particularly students of color, LGBTQ+ students, immigrant students, and students with disabilities. Preserving the statewide moratorium on biometric surveillance in schools will protect our kids from racially biased, ineffective, unsecure and dangerous tech.

Biometric surveillance depends on artificial intelligence, and human bias infects AI systems. Facial recognition software programmed to only recognize two genders will leave transgender and nonbinary individuals invisible. A security camera that learns who is “suspicious looking” using pictures of inmates will replicate the systemic racism that results in the mass incarceration of Black and brown men. Facial recognition systems may be up to 99 percent accurate on white men, but can be wrong more than one-in-three times for some women of color. What’s worse, facial recognition technology has even higher inaccuracy rates when used on students. Voice recognition software, another widely known biometric surveillance tool, echoes this pattern of poor accuracy for those who are nonwhite, non-male, or young.

The data collected by biometric surveillance technologies is vulnerable to a variety of security threats, including hacking, data breaches and insider attacks. This data – which includes scans of facial features, fingerprints, and irises – is unique and highly sensitive, making it a valuable target for hackers and, once compromised, impossible to reissue like you would a password or PIN. Collecting and storing biometric data in schools, which tend to have inadequate cybersecurity practices, puts children at great risk of being tracked and targeted by malicious actors. There is absolutely no need to expose children to these privacy and safety risks.

The types of biometric surveillance technology being marketed to schools are widely recognized as dangerous. One particularly controversial vendor of facial recognition technology, Clearview AI, has reportedly tested or implemented its systems in more than 50 educational institutions across 24 states. Other countries have started to appreciate the threat Clearview poses to privacy, with Australia recently ordering it to cease its scraping of images. And last year, privacy groups in Austria, France, Greece, Italy and the U.K. filed legal complaints against Clearview. All while the company continues to market its products to schools in the U.S. As the world begins to wake up to the risks of using facial recognition, New York should not make the mistake of allowing young kids to be subjected to its harms.

Additionally, one study found that CCTV systems in U.K. secondary schools led many students to suppress their expressions of individuality and alter their behavior. Normalizing biometric surveillance will bring about a bleak future for kids at schools across the country.

New York shouldn’t waste money on tech that criminalizes and harms young people. Most school shootings are committed by current students or alumni of the school in question, whose faces would not be flagged as suspicious by facial recognition systems. And even if the technology were to flag a real potential perpetrator of violence, given the speed at which most school shootings usually come to an end, it is unlikely that law enforcement would be notified and able to arrive at the scene in time to prevent such horrendous acts.

Students, parents and stakeholders have the opportunity to submit a brief survey to let the State Education Department know that they want facial recognition and other biased AI out of their schools, not just temporarily but permanently. New York must at least extend the moratorium on biometric surveillance in schools, and ultimately should put an end to the use of such problematic technology altogether."

Mahima Arya is a computer science fellow at the Surveillance Technology Oversight Project (S.T.O.P.), a human rights fellow at Humanity in Action, and a graduate of Carnegie Mellon University. Nina Loshkajian is a D.A.T.A. Law Fellow at S.T.O.P. and a graduate of New York University School of Law.

https://www.timesunion.com/opinion/article/Commentary-Keep-facial-recognition-out-of-New-17523857.php

By Joshua Brustein

"Over the course of his life, Alejo Lopez de Armentia has played video games for a variety of reasons. There was the thrill of competition, the desire for companionship, and, at base, the need to pass the time. In his 20s, feeling isolated while working for a solar panel company in Florida, he spent his evenings using video games as a way to socialize with his friends back in Argentina, where he grew up.

But 10 months ago, Armentia, who’s 39, discovered a new game, and with it a new reason to play: to earn a living. Compared with the massively multiplayer games that he usually played, Axie Infinity was remarkably simple. Players control three-member teams of digital creatures that fight one another. The characters are cartoonish blobs distinguished by their unique mixture of interchangeable body parts, not unlike a Mr. Potato Head. During “combat” they cheerily bob in place, waiting to take turns casting spells against their opponents. When a character is defeated, it becomes a ghost; when all three squad members are gone, the team loses. A match takes less than five minutes. Even many Axie regulars say it’s not much fun, but that hasn’t stopped people from dedicating hours to researching strategies, haunting Axie-themed Discord channels and Reddit forums, and paying for specialized software that helps them build stronger teams. Armentia, who’s poured about $40,000 into his habit since last August, professes to like the game, but he also makes it clear that recreation was never his goal. “I was actually hoping that it could become my full-time job,” he says.

The reason this is possible—or at least it seemed possible for a few weird months last year—is that Axie is tied to crypto markets. Players get a few Smooth Love Potion (SLP) tokens for each game they win and can earn another cryptocurrency, Axie Infinity Shards (AXS), in larger tournaments. The characters, themselves known as Axies, are nonfungible tokens, or NFTs, whose ownership is tracked on a blockchain, allowing them to be traded like a cryptocurrency as well.

There are various ways to make money from Axie. Armentia saw his main business as breeding, which doesn’t entail playing the game so much as preparing to play it in the future. Players who own Axies can create others by choosing two they already own to act as parents and paying a cost in SLP and AXS. Once they do this and wait through an obligatory gestation period, a new character appears with some combination of its parents’ traits. Every new Axie player needs Axies to play, pushing up their price. Armentia started breeding last August, at a time when normal economics seemed not to apply. “You would be making 300%, 400% on your money in five days, guaranteed,” he says. “It was stupid.”

Axie’s creator, a startup called Sky Mavis Inc., heralded all this as a new kind of economic phenomenon: the “play-to-earn” video game. “We believe in a world future where work and play become one,” it said in a mission statement on its website. “We believe in empowering our players and giving them economic opportunities. Welcome to our revolution.” By last October the company, founded in Ho Chi Minh City, Vietnam, four years ago by a group of Asian, European, and American entrepreneurs, had raised more than $160 million from investors including the venture capital firm Andreessen Horowitz and the crypto-focused firm Paradigm, at a peak valuation of about $3 billion. That same month, Axie Infinity crossed 2 million daily users, according to Sky Mavis. If you think the entire internet should be rebuilt around the blockchain—the vision now referred to as web3—Axie provided a useful example of what this looked like in practice. Alexis Ohanian, co-founder of Reddit and an Axie investor, predicted that 90% of the gaming market would be play-to-earn within five years. Gabby Dizon, head of crypto gaming startup Yield Guild Games, describes Axie as a way to create an “investor mindset” among new populations, who would go on to participate in the crypto economy in other ways. In a livestreamed discussion about play-to-earn gaming and crypto on March 2, former Democratic presidential contender Andrew Yang called web3 “an extraordinary opportunity to improve the human condition” and “the biggest weapon against poverty that we have.”

By the time Yang made his proclamations, the Axie economy was deep in crisis. It had lost about 40% of its daily users, and SLP, which had traded as high as 40¢, was at 1.8¢, while AXS, which had once been worth $165, was at $56. To make matters worse, on March 23 hackers robbed Sky Mavis of what at the time was roughly $620 million in cryptocurrencies. Then in May the bottom fell out of the entire crypto market." ... For full article, please visit: https://www.bloomberg.com/news/features/2022-06-10/axie-infinity-axs-crypto-game-promised-nft-riches-gave-ruin

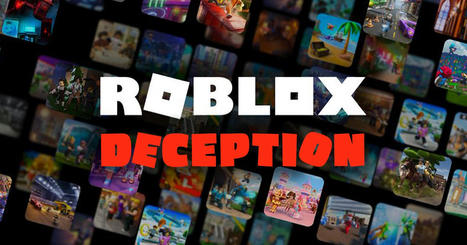

Big Tech's self-regulatory effort has long been accused of being toothless. Is that about to change? By Mark Keierleber - July 24, 2022 "A few months after education leaders at America’s largest school district announced that a technology vendor had exposed sensitive student information in a massive data breach, the company at fault — Illuminate Education — was recognized with the software industry’s equivalent of the Oscars. Since that disclosure in New York City schools, the scope of the breach has only grown, with districts in six states announcing that some 3 million current and former students had become victims. Illuminate has never disclosed the full extent of the blunder, even as critics decry significant harm to kids and security experts question why the company is being handed awards instead of getting slapped with sanctions.

Amid demands that Illuminate be held accountable for the breach — and for allegations that it misrepresented its security safeguards — the company could soon face unprecedented discipline for violating the Student Privacy Pledge, a self-regulatory effort by Big Tech to police shady business practices. In response to inquiries by The 74, the Future of Privacy Forum, a think tank and co-creator of the pledge, disclosed Tuesday that Illuminate could soon get the boot.

Forum CEO Jules Polonetsky said his group will decide within a month whether to revoke Illuminate’s status as a pledge signatory and refer the matter to state and federal regulators, including the Federal Trade Commission, for possible sanctions.

“We have been reviewing the deeply concerning circumstances of the breach and apparent violations of Illuminate Education’s pledge commitments,” Polonetsky said in a statement to The 74. Illuminate did not respond to interview requests.

In a twist, the pledge was co-created by the Software and Information Industry Association, the trade group that recognized Illuminate last month as being among “the best of the best” in education technology. The pledge, created nearly a decade ago, is designed to ensure that education technology vendors are ethical stewards of kids’ most sensitive data. Its staunchest critics have assailed the pledge as being toothless — if not an outright effort to thwart meaningful government regulation. Now, they are questioning whether its response to the massive Illuminate breach will be any different.

“I have never seen anybody get anything more than a slap on the wrist from the actual people controlling the pledge,” said Bill Fitzgerald, an independent privacy researcher. Taking action against Illuminate, he said, “would break the pledge’s pretty perfect record for not actually enforcing any kind of sanctions against bad actors.”

Through the voluntary pledge, launched in 2014, hundreds of education technology companies have agreed to a slate of safety measures to protect students’ online privacy. Pledge signatories, including Illuminate, have promised they will not sell student data to third parties or use the information for targeted advertising. Companies that sign the commitment also agree to “maintain a comprehensive security program” to protect students’ personal information from data breaches.

The privacy forum, which is funded by tech companies, has long maintained that the pledge is legally binding and offers assurances to school districts as they shop for new technology. In the absence of a federal consumer privacy law, the forum argues the pledge grants “an important and unique means for privacy enforcement,” giving the Federal Trade Commission and state attorneys general an outlet to hold education technology companies accountable via consumer protection rules that prohibit unfair and deceptive business practices.

For years, critics have accused the pledge of providing educators and parents false assurances that a given product is safe, rendering it less useful than a pinky promise. Meanwhile, schools and technology companies have become increasingly entangled, particularly during the pandemic. As districts across the globe rushed to create digital classrooms, few governments checked to make sure the tech products officials endorsed were safe for children, according to a recent report by Human Rights Watch. Shoddy student data practices by leading tech vendors, the group found, were rampant. Of the 164 tools analyzed, 89 percent “engaged in data practices that put children’s rights at risk,” with a majority giving student records to advertisers.